Experts have warned that fraudsters are increasingly using AI to steal people’s identities, and voices, in a bid to trick thousands out of their money.

The scam has become increasingly prevalent as artificial intelligence has become readily available, often for free, giving criminals another tool with which to con innocent, and often vulnerable, people. Cybersecurity experts have raised concerns about crooks stealing people’s information from their social media accounts in order to impersonate them and fool others.

A new Channel 5 series, Identity Theft: Don’t Get Caught Out, which continues tonight, examines how criminals are using the advanced technology to create new scams. It hears from a woman who was tricked into thinking her daughter had been kidnapped after criminals used AI to clone her daughter’s voice.

Another victim was robbed of more than £1,000 of tech by criminals who used an app to pretend to purchase an iPad from her, while a third discusses how she lost control of her social media accounts to a hacker.

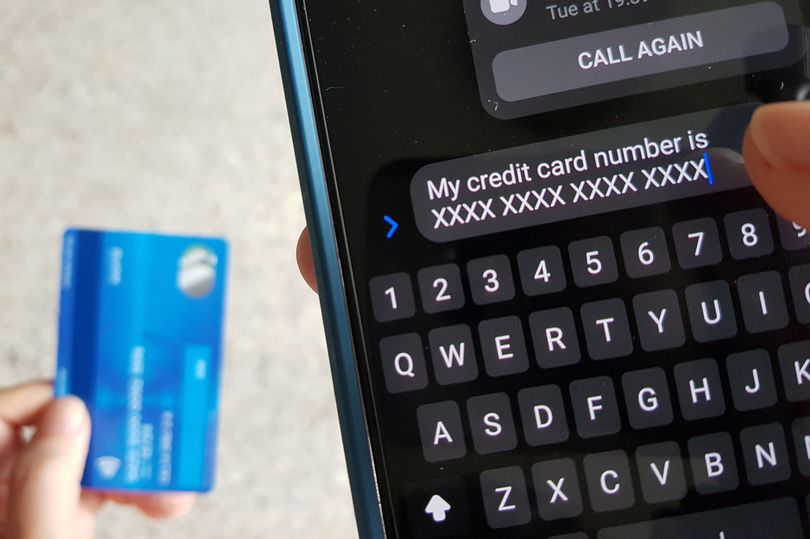

Online scam victim sending credit number to scammer

While legitimate organisations are using AI to scale up their ability to respond to customers, often in a bid to be able to resolve simple issues quickly, the same applies to criminals wanting to expand their operations. Daniel Prince, a professor of cyber security at Lancaster University, told the Mirror: “This ability to ‘scale up’ is one aspect criminals are using generative AI technologies for.

“It enables them to trawl large numbers of individuals in a plausible way until they get someone to respond and then they can hand them over to a real human to drive forward the fraud. We have seen this approach before, and anyone looking at their email inbox or spam folder can attest to the number of automated scam messages they receive.

“However, the use of generative AI goes beyond this type of automation of criminal activity. It affords the criminals the opportunity to construct fake ‘models’ of real people to enhance their criminal activity.”

Professor Prince argues it can be straightforward for criminals to construct models of individuals by using software which can respond over text messages like a known person, construct audio that sounds like someone and even generate video and pictures.

“These models are trained on publicly available information about people, typically from social media,” he warned. “Text, audio, images and video can all be harvested from social media accounts if they are open, or if their connections and friends’ accounts have been compromised.

“And using AI it often does not take much information to create something plausible. Once these models are trained, it is then possible to construct media to use when reaching out to a potential target to try and get them to engage. But a common ploy is to try and create a high-pressure or concerning situation, such as a loved one needing help, or an authoritative figure or organisation demanding action.”

The expert warns the same scenario can also apply in a professional setting, where it typically involves emails purporting to come from a senior figure in the organisation asking, for example, for a change in payment details. It can be even more convincing if a voicemail is left.

Dr Katie Paxton-Fear, lecturer in cyber security at Manchester Metropolitan University, says she has seen a range of different attacks to steal identities. Just one instance is when a criminal duplicates a Facebook account – whereby pictures are cloned, along with posts.

The criminal then contacts the victim’s friends, claiming to have been hacked and that this is the new account, and includes a malicious link which captures the friends’ usernames and passwords. Meanwhile, there has been a rise of deepfake videos in the past year – where AI is used to clone someone’s voice and likeness.

“There have been some reports that this is being used on individuals but at the moment it’s more with celebrities because it requires a large number of examples of the person speaking to a camera,” Dr Paxton-Fear told the Mirror. She cites the example of MoneySavingExpert Martin Lewis, whose face and audio have been used to sell fraudulent investment schemes, with a deepfake advert doing the rounds on Facebook.

Last year he appeared on Good Morning Britain to express his anger and outrage over the scams, and revealed he has had members of the public send him angry messages after believing it was indeed him who had conned them. Speaking to Ben Shephard and Kate Garraway, Mr Lewis said: “It’s an absolutely terrifying development.

“This is still only early stages of the technology and they are only going to get better… I am viscerally angry about this. These scams destroy lives. The impact scams have on mental health and self-esteem is huge. After all these years, how many times have I warned I don’t do adverts, we’re still having these conversations.

“Now it’s deepfake videos that are very plausible. We’re in a dangerous dystopian future…”

Dr Paxton-Fear echoes Professor Prince’s comments, saying: “We’ve also seen the rise of AI in general. By using tools like ChatGPT, crooks can tailor their attacks, having messages that target you specifically. While AI tools have tried to prevent this, often their solutions can be bypassed.

“This allows scammers to exactly target you, your job, or friends. All scams are designed to be easy to fall for and they always take advantage of our better nature to help others and do a good job, especially now these scams are extremely tailored to individuals.”

Expert advice

Professor Prince advises readers to consider their social media presence. “Think about locking down your profile to limit information about you going out publicly,” he suggested. “Avoid accepting connections until you have verified who they really are.

“Think about your current connections, do you really need to stay connected to the person you met on holiday five years ago who you have never spoken with since then? Every connection is a way a criminal can get information about you.”

The expert also suggests thinking about how you verify messages and calls. “Being suspicious is not a bad thing, and if you are worried, just call the person directly or call their partner or someone who might know them,” he instructed. If you have been caught out, it is important to act immediately, as you will be more likely to get your money back.

Prof Prince recommends having your bank’s hotline number to hand. It’s important to notify the police, the National Cyber Security Centre (NCSC) or Action Fraud. He also suggests having complex passwords, using two-factor authentication and turning on security alerts to monitor where you are logging in and from what devices.

He also says to raise awareness among relatives and close friends. “Get it out in the open and discuss how you would ask for money etc – knowing this upfront will help you to detect when things might not be right,” Prof Prince said.

“This needs to be discussed as this type of crime is becoming increasingly common and having these types of conversations with those closest to you – including any children – may make the difference between someone you care about getting scammed or not.”

Dr Paxton-Fear says never to be afraid of asking people a security question, as your bank would, such as ‘When did we last meet up?’ or, if it’s a dodgy message at work, ‘Who is my line manager?’ “If you get a reach out from someone high up in your company, always check with your IT or security team,” she said.

“Never trust a WhatsApp number claiming to be someone you know and always remain vigilant. Deepfake videos will look weird, there may be a robotic-sounding voice, and the movement will seem unnatural. Do your research before investing any money into a scheme or cryptocurrency, don’t trust a celebrity alone. If something seems too good to be true, it probably is.”
